- Introduction

- About the Stack I Used

- How Did I Build It?

- What Problems Am I Trying to Solve?

- What Were Some of My Design Decisions?

- What Did I Use to Solve the Problem?

- What Packages Did I Use to Solve the Problem?

- What Did I Learn?

- What Would I Change Next Time?

- Code Snippets

- Screenshots

- Exploring the Admin Section

- User Metrics Section

- Conclusions


Introduction

I built an A.I. Code Interviewer web app using the TALL stack. This app helps users prepare for coding interviews by offering personalized code challenges with real-time assistance from an A.I. chatbot. The app includes features like a sandboxed coding environment, a countdown timer for gamification, and XP points for tracking progress.

About the Stack I Used

The TALL stack is made up of:

- Tailwind CSS: For styling the app.

- Alpine.js: For adding interactivity to the frontend.

- Livewire: For dynamic, reactive components.

- Laravel: For backend logic and handling data.

How Did I Build It?

- Setting Up the Environment: Started with a Laravel project and integrated Tailwind, Alpine.js, and Livewire.

- Creating the Admin Section: Built an interface for admins to create and customize code challenges, categorized by difficulty and topic.

- A.I. Chatbot Integration: Connected the backend to the OpenAI API to enable the chatbot to assist users.

- Coding Sandbox: Implemented a sandboxed iframe where users can write and execute code, with an output terminal for results.

- Gamification Features: Added a countdown timer and XP points system to make practice more engaging.

- Metrics: Added an A.I.-powered metrics section to track user progress and performance.
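The countdown timer from the gamification step could be written as an Alpine.js data component. This is a minimal sketch rather than the app's actual code: the `countdownTimer` name, its fields, and the `onExpire` callback are hypothetical. In a Blade view it could be mounted with `x-data="countdownTimer(300)"` and `x-init="start()"`, with `x-text="formatted"` for the display.

```javascript
// Hypothetical Alpine.js data component for the challenge countdown timer
function countdownTimer(seconds, onExpire = () => {}) {
  return {
    remaining: seconds,
    intervalId: null,

    // Start ticking once per second (safe to call more than once)
    start() {
      if (this.intervalId !== null) return
      this.intervalId = setInterval(() => this.tick(), 1000)
    },

    // Decrease the remaining time; stop and notify when it reaches zero
    tick() {
      if (this.remaining > 0) this.remaining -= 1
      if (this.remaining === 0) {
        this.stop()
        onExpire()
      }
    },

    stop() {
      clearInterval(this.intervalId)
      this.intervalId = null
    },

    // Remaining time as mm:ss for display
    get formatted() {
      const minutes = String(Math.floor(this.remaining / 60)).padStart(2, '0')
      const secs = String(this.remaining % 60).padStart(2, '0')
      return `${minutes}:${secs}`
    },
  }
}
```

Keeping the logic in a plain function keeps it testable outside the browser, and Alpine only adds reactivity on top.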

What Problems Am I Trying to Solve?

The main problems I aimed to solve are:

- Lack of Real-Time Assistance: Many coding practice platforms lack instant help, which can be frustrating.

- Engagement and Motivation: Traditional coding practice can be monotonous, so I included gamification to keep users motivated.

- Customization of Challenges: I wanted admins to have the flexibility to create tailored challenges.

What Were Some of My Design Decisions?

- User-Friendly Interface: Focused on making the admin and user interfaces intuitive and easy to navigate.

- Real-Time Interaction: Ensured the A.I. chatbot could provide immediate feedback by accessing the user's code and outputs.

- Secure Coding Environment: Used a sandboxed iframe to run user code separately from the main application.
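Giving the chatbot access to the user's code and output largely comes down to what goes into each request. Here is a minimal sketch, assuming the Chat Completions `messages` format; the `buildHintMessages` helper, its field names, and the system prompt wording are hypothetical.

```javascript
// Hypothetical helper: package the challenge, the user's current code, and the
// latest sandbox output into Chat Completions-style messages
function buildHintMessages({ challenge, code, output, question }) {
  // Assumed assistant behavior: give hints, not full solutions
  const system = [
    'You are a coding interview assistant.',
    'Give hints and explanations, but do not write the full solution.',
  ].join(' ')

  // Everything the model needs to see in one user message
  const context = [
    `Challenge: ${challenge}`,
    'Current code:',
    code,
    'Latest output:',
    output || '(no output yet)',
    `Question: ${question}`,
  ].join('\n')

  return [
    { role: 'system', content: system },
    { role: 'user', content: context },
  ]
}
```

Sending the current code and output with every question is what lets the answer refer to the user's actual attempt instead of the challenge in general.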

What Did I Use to Solve the Problem?

- OpenAI API: For the A.I. chatbot to provide intelligent assistance.

- Tailwind CSS: To create a clean and responsive design.

- Livewire: For dynamic components that update without page reloads.

- Laravel: To handle backend processes, data management, and API interactions.

What Packages Did I Use to Solve the Problem?

- Spatie Laravel Packages: For managing roles and permissions.

- Livewire Components: For building reactive interfaces.

- OpenAI PHP Client: To interact with the OpenAI API.

What Did I Learn?

- Integrating A.I. with Web Apps: Learned how to effectively connect and use the OpenAI API.

- Efficient Use of TALL Stack: Improved my skills in building interactive applications using the TALL stack.

- Importance of UX: Understood the value of creating a user-friendly interface to enhance the user experience.

- Metrics: Learned how to use metrics to track user progress and performance.

What Would I Change Next Time?

- Expand A.I. Capabilities: Explore more advanced features of the OpenAI API to provide even better assistance.

- Enhanced Gamification: Add more gamification elements to further engage users.

- User Analytics: Implement analytics to track user progress and improve the platform based on feedback.

Code Snippets

To illustrate some key parts of the implementation, here are a few code snippets:

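Setting Up the A.I. Chatbot

```php
// At the top of the class file
use OpenAI; // from the openai-php/client package

// Inside the component or service class
public function getLLMResponse(string $userInput): string
{
    // Create a client with the API key from .env
    $openai = OpenAI::client(env('OPENAI_API_KEY'));

    // gpt-3.5-turbo-0125 is a chat model, so it is called through the
    // Chat Completions endpoint with a "messages" array, not a "prompt"
    $response = $openai->chat()->create([
        'model' => 'gpt-3.5-turbo-0125',
        'messages' => [
            ['role' => 'user', 'content' => $userInput],
        ],
        'max_tokens' => 150,
    ]);

    // Return the text of the first choice (empty string if none was returned)
    return $response->choices[0]->message->content ?? '';
}
```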

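Executing Code in a Sandbox `<iframe>`

```html
<iframe id="sandbox" src="sandbox.html"></iframe>

<script>
function runJsCode(code) {
    // The iframe on the page and the output element inside sandbox.html
    var outputFrame = document.getElementById('sandbox')
    var frameWindow = outputFrame.contentWindow
    var outputDiv = outputFrame.contentDocument.getElementById('sandbox')
    outputDiv.textContent = ''

    // Intercept 'console.log' calls made inside the iframe and redirect them to the output div
    var consoleLog = frameWindow.console.log
    frameWindow.console.log = function (...args) {
        outputDiv.textContent += args.join(' ') + '\n'
        consoleLog.apply(frameWindow.console, args)
    }

    try {
        // Use the iframe's own Function constructor so the code runs against the
        // iframe's globals instead of the parent page's. A same-origin iframe is
        // still not a security boundary: the code can reach window.parent.
        new frameWindow.Function(code)()
    } catch (error) {
        // Display error message if code execution fails
        outputDiv.textContent += 'Error: ' + error.message
    } finally {
        // Restore the original console.log for the next run
        frameWindow.console.log = consoleLog
    }
}
</script>
```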
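A same-origin iframe such as `sandbox.html` is not a security boundary, because code running inside it can reach the parent page through `window.parent`. One alternative, sketched here rather than taken from the app, is an iframe with `sandbox="allow-scripts"` (and without `allow-same-origin`). It receives the code through `srcdoc` and reports output back with `postMessage`. The `buildSandboxDocument` helper is hypothetical.

```javascript
// Hypothetical helper: build a srcdoc page that runs user code and reports
// console.log output and errors to the parent page via postMessage
function buildSandboxDocument(code) {
  // JSON-encode the code and escape "<" so a "</script>" inside the user's
  // code cannot close the script tag early
  const encoded = JSON.stringify(code).replace(/</g, '\\u003c')
  return `<!DOCTYPE html><html><body><script>
    const send = (type, text) => parent.postMessage({ type, text }, '*');
    console.log = (...args) => send('log', args.map(String).join(' '));
    try {
      new Function(${encoded})();
    } catch (error) {
      send('error', error.message);
    }
  <\/script></body></html>`
}

// Browser usage: without allow-same-origin the iframe gets an opaque origin,
// so it cannot touch the parent's DOM, cookies, or storage
// const frame = document.createElement('iframe')
// frame.setAttribute('sandbox', 'allow-scripts')
// frame.srcdoc = buildSandboxDocument(userCode)
// window.addEventListener('message', (event) => {
//   if (event.source !== frame.contentWindow) return // ignore other senders
//   console.log(event.data.type, event.data.text)
// })
// document.body.appendChild(frame)
```

The trade-off is that the parent can no longer read the iframe's DOM directly, so all output has to travel through messages.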

Exploring the Admin Section

Fine-Tuning for Optimal Challenge Management

The admin section of the A.I. Code Interviewer app gives administrators the tools to manage challenges and tune the user experience. Below are its key features:

1. Challenge Dashboard

The challenge dashboard serves as a central hub for viewing, organizing, and managing all coding challenges. Admin users can:

- Track the number of active challenges.

- Filter and search for challenges based on criteria such as programming language, difficulty level, or category.

- Monitor usage statistics to identify popular or underused challenges.

2. Build LLM Prompt for Fetching Challenges

This feature lets admin users fine-tune the prompts used to fetch challenges from OpenAI’s API. By crafting precise, targeted prompts, admins can:

- Specify coding concepts, languages, or difficulty levels.

- Tailor queries to match the needs of users with diverse skill levels.

- Optimize the learning and problem-solving experience by generating challenges aligned with real-world requirements.
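The prompt builder turns a few admin form fields into a precise instruction. This is a sketch under assumptions: the `buildChallengePrompt` name, its parameters, the three difficulty levels, and the requested JSON keys are all hypothetical. The keys were chosen so the response can be imported programmatically.

```javascript
// Hypothetical prompt builder for the "fetch challenges" admin form
function buildChallengePrompt({ language, difficulty, topic, count = 1 }) {
  // Assumed difficulty levels; reject anything else before calling the API
  const allowed = ['easy', 'medium', 'hard']
  if (!allowed.includes(difficulty)) {
    throw new Error(`Unknown difficulty: ${difficulty}`)
  }

  return [
    `Write ${count} ${difficulty} coding interview challenge(s) in ${language}`,
    `about ${topic}.`,
    // Asking for strict JSON makes the import step parseable
    'Respond only with a JSON array. Each item must have the keys',
    '"title", "description", "difficulty", "starter_code" and "solution".',
  ].join(' ')
}
```

Validating the parameters before building the prompt avoids spending an API call on a request the import step would reject anyway.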

3. Import LLM Challenges

Admins can import coding challenges directly from OpenAI’s API with enhanced control over the process. Key functionalities include:

- Requirements Setup: Define the parameters for importing challenges, such as the target programming language, difficulty range, and specific topic.

- OpenAI API Connection Test: Validate the API connection to ensure smooth data retrieval.

- Automated LLM Challenge Completion: Automatically fetch and pre-validate challenges for accuracy and feasibility.

- Manual Challenge Completion: Review and approve challenges individually for greater oversight and customization.
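Pre-validation can start with a structural check on the model's response before any human review. This is a minimal sketch that assumes the model was asked to return a JSON array with the fields below; `parseImportedChallenges` and the field names are hypothetical.

```javascript
// Hypothetical required fields for an imported challenge
const REQUIRED_FIELDS = ['title', 'description', 'difficulty', 'starter_code', 'solution']

// Parse the LLM response and split it into challenges that are ready for
// review and ones that must be rejected, with a reason for each rejection
function parseImportedChallenges(rawResponse) {
  let items
  try {
    items = JSON.parse(rawResponse)
  } catch (error) {
    return { valid: [], rejected: [{ reason: 'Response is not valid JSON' }] }
  }
  if (!Array.isArray(items)) {
    return { valid: [], rejected: [{ reason: 'Expected a JSON array' }] }
  }

  const valid = []
  const rejected = []
  for (const item of items) {
    // A field counts as missing if it is absent, not a string, or blank
    const missing = REQUIRED_FIELDS.filter(
      (field) => typeof item?.[field] !== 'string' || item[field].trim() === ''
    )
    if (missing.length === 0) {
      valid.push(item)
    } else {
      rejected.push({ item, reason: `Missing fields: ${missing.join(', ')}` })
    }
  }
  return { valid, rejected }
}
```

Only the `valid` list would move on to automated completion or manual approval. Checking feasibility, such as running the solution, would be a separate step.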

4. Imported Challenge Settings and Customization

Once challenges are imported, admin users can adjust their properties to better suit the platform’s needs. This includes:

- Editing challenge descriptions, requirements, and instructions.

- Setting default time limits and XP rewards.

- Adjusting difficulty levels based on feedback and analytics.

- Reviewing the reference answer for each imported challenge, which is also generated by the A.I.
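Default time limits and XP rewards could live in a per-difficulty table that admins override for individual challenges. The numbers and the early-finish bonus below are placeholders for illustration, not the app's real settings. `DEFAULTS` and `xpForCompletion` are hypothetical names.

```javascript
// Hypothetical per-difficulty defaults; the numbers are placeholders
const DEFAULTS = {
  easy: { timeLimitSeconds: 600, xp: 50 },
  medium: { timeLimitSeconds: 1200, xp: 100 },
  hard: { timeLimitSeconds: 1800, xp: 200 },
}

// XP for a solved challenge: admin overrides win over the defaults, and
// finishing early earns a bonus of up to 50% (an illustrative rule)
function xpForCompletion(difficulty, secondsTaken, overrides = {}) {
  if (!Object.hasOwn(DEFAULTS, difficulty)) {
    throw new Error(`Unknown difficulty: ${difficulty}`)
  }
  const settings = { ...DEFAULTS[difficulty], ...overrides }

  // No XP once the countdown has run out
  if (secondsTaken > settings.timeLimitSeconds) return 0

  const unusedShare = 1 - secondsTaken / settings.timeLimitSeconds
  return Math.round(settings.xp * (1 + 0.5 * unusedShare))
}
```

For example, `xpForCompletion('easy', 300)` uses half the time limit and returns 63 (50 XP plus a 25% bonus, rounded).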

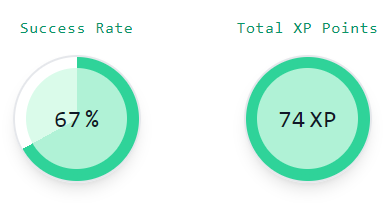

User Metrics Section

The user metrics section provides comprehensive insights into user performance and engagement, offering data-driven tools to enhance the learning experience. Key features include:

1. User Performance Statistics and A.I. Feedback

- Track individual user performance across completed challenges.

- Provide personalized feedback powered by A.I., highlighting strengths and areas for improvement.

- Summarize progress trends over time to motivate users and guide their preparation journey.
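The per-user statistics that the A.I. feedback builds on can be aggregated from attempt records. This sketch assumes a hypothetical attempt shape (`userId`, `solved`, `seconds`), since the app's actual schema isn't described here.

```javascript
// Hypothetical summary of one user's attempts: success rate and average solve time
function summarizeUser(attempts, userId) {
  const mine = attempts.filter((a) => a.userId === userId)
  const solved = mine.filter((a) => a.solved)

  // Average time only over solved attempts; null when nothing is solved yet
  const avgSolveSeconds = solved.length
    ? solved.reduce((sum, a) => sum + a.seconds, 0) / solved.length
    : null

  return {
    attempts: mine.length,
    solved: solved.length,
    successRate: mine.length ? solved.length / mine.length : 0,
    avgSolveSeconds,
  }
}
```

A compact summary like this could be serialized into the prompt that asks the model for personalized feedback, which keeps the request small.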

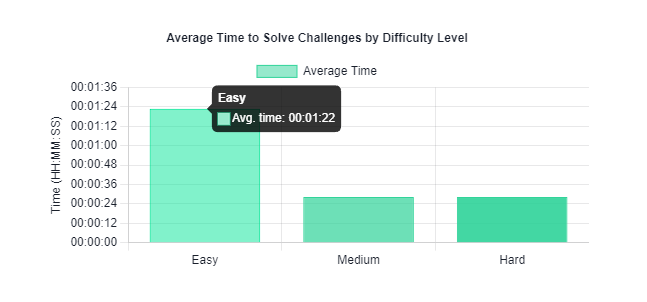

2. Challenge Difficulty Level Statistics

- Analyze how users perform across challenges of varying difficulty levels.

- Identify patterns to refine difficulty scaling and ensure balanced progression.
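A difficulty breakdown is a group-by over attempts. Here is a minimal sketch with a hypothetical attempt shape (`difficulty`, `solved`). A level whose success rate sits far from its neighbours' would be a candidate for re-grading.

```javascript
// Hypothetical: attempts, solves, and success rate per difficulty level
function successByDifficulty(attempts) {
  const stats = {}
  for (const { difficulty, solved } of attempts) {
    stats[difficulty] ??= { attempts: 0, solved: 0 }
    stats[difficulty].attempts += 1
    if (solved) stats[difficulty].solved += 1
  }
  // Add the success rate once all attempts are counted
  for (const level of Object.keys(stats)) {
    stats[level].successRate = stats[level].solved / stats[level].attempts
  }
  return stats
}
```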

3. User A.I. Hint Usage Metrics

- Monitor how often users request hints from the A.I. assistant.

- Evaluate the correlation between hint usage and challenge success rates to optimize assistance features.
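A simple first look at hint usage versus success is to split attempts into those with and without hints and compare the two success rates. This is a rough proxy rather than a full correlation analysis, and it is not causal: users may ask for hints more often on harder challenges. The attempt shape (`hintsUsed`, `solved`) is hypothetical.

```javascript
// Hypothetical: compare success rates of attempts with and without A.I. hints
function hintImpact(attempts) {
  // Share of solved attempts in a group, or null for an empty group
  const rate = (group) =>
    group.length ? group.filter((a) => a.solved).length / group.length : null

  const withHints = attempts.filter((a) => a.hintsUsed > 0)
  const withoutHints = attempts.filter((a) => a.hintsUsed === 0)

  return {
    withHints: rate(withHints),
    withoutHints: rate(withoutHints),
    avgHintsPerAttempt: attempts.length
      ? attempts.reduce((sum, a) => sum + a.hintsUsed, 0) / attempts.length
      : 0,
  }
}
```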

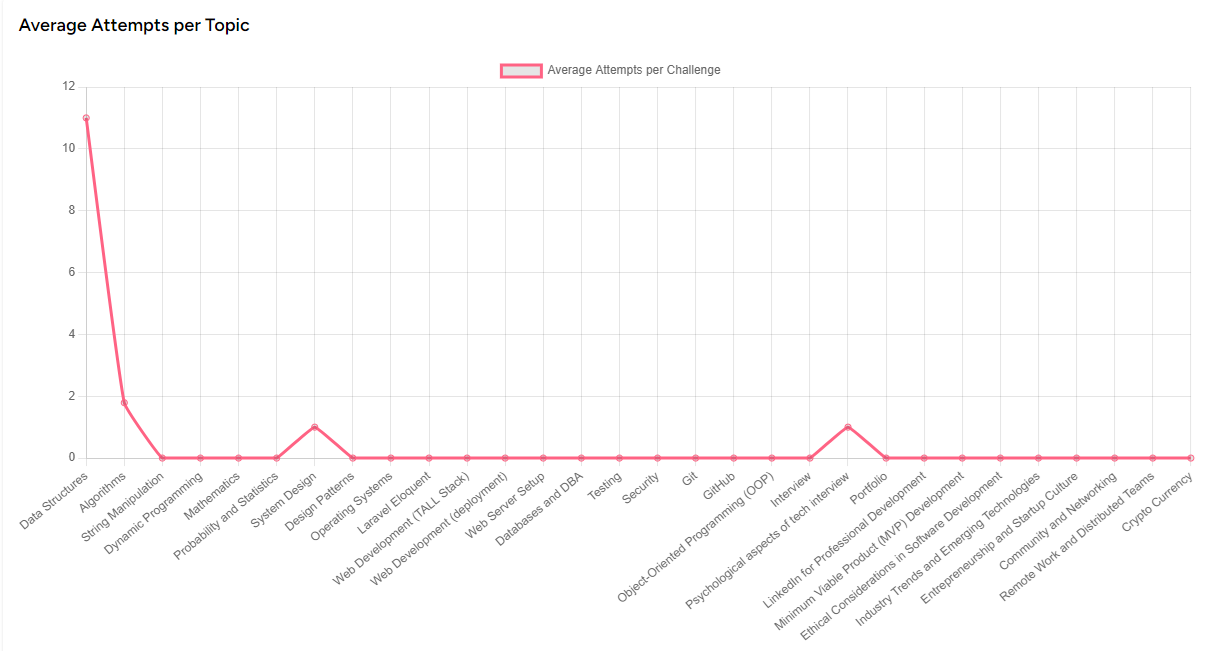

4. Challenges by Topics, Attempts, and Time-Based Metrics

- Categorize challenges by topic to identify user preferences and knowledge gaps.

- Track challenges based on the number of attempts, highlighting areas where users face repeated difficulties.

- Present time-based metrics to evaluate how quickly users solve challenges, helping gauge efficiency improvements.
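Topic, attempt, and time metrics can all come from one pass over the attempts. In this sketch the attempt shape (`topic`, `challengeId`, `solved`, `seconds`) is hypothetical. The median is used for solve time because a few abandoned sessions would skew the mean.

```javascript
// Hypothetical per-topic breakdown: attempts per challenge and median solve time
function topicMetrics(attempts) {
  // Group attempts by topic
  const byTopic = new Map()
  for (const a of attempts) {
    if (!byTopic.has(a.topic)) byTopic.set(a.topic, [])
    byTopic.get(a.topic).push(a)
  }

  const median = (values) => {
    if (values.length === 0) return null
    const sorted = [...values].sort((x, y) => x - y)
    const mid = Math.floor(sorted.length / 2)
    return sorted.length % 2 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2
  }

  const result = {}
  for (const [topic, list] of byTopic) {
    const challenges = new Set(list.map((a) => a.challengeId))
    result[topic] = {
      // High values point at challenges users keep retrying
      attemptsPerChallenge: list.length / challenges.size,
      medianSolveSeconds: median(list.filter((a) => a.solved).map((a) => a.seconds)),
    }
  }
  return result
}
```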

5. Leaderboard and Comparison

- Display a leaderboard showcasing top-performing users based on XP, challenge completions, and time efficiency.

- Enable comparison tools for users to benchmark their performance against peers, fostering a sense of competition and community.
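The leaderboard needs a deterministic order when users tie on XP. This sketch breaks ties first by completions and then by lower total time. The user fields and the percentile-style comparison are hypothetical.

```javascript
// Hypothetical leaderboard: XP first, then completions, then lower total time
function buildLeaderboard(users, limit = 10) {
  return [...users]
    .sort(
      (a, b) =>
        b.xp - a.xp ||
        b.completed - a.completed ||
        a.totalSeconds - b.totalSeconds
    )
    .slice(0, limit)
    .map((user, index) => ({ rank: index + 1, name: user.name, xp: user.xp }))
}

// Hypothetical comparison: share of other users this user beats on XP
function xpPercentile(users, name) {
  const me = users.find((u) => u.name === name)
  const others = users.filter((u) => u.name !== name)
  if (!me || others.length === 0) return null
  return others.filter((u) => u.xp < me.xp).length / others.length
}
```

Copying the array before sorting keeps the caller's data unchanged.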

Some metrics screenshots

Conclusions

Building the A.I. Code Interviewer was a rewarding experience that enhanced my skills in the TALL stack and A.I. integration. This project not only solves practical problems for coding interview preparation but also makes the process engaging and effective. I encourage other developers to explore similar projects and experiment with the TALL stack and A.I. technologies.